Scenario 1

I have two remote servers: Server1 is live and Server2 is the backup.

Server1 is already live and has no cron job running. I don’t want to install a cron job on Server1, for various reasons.

Server2 has cron running. Every day at 1 AM it connects to Server1 over SSH and executes the backup script daily-backup-script-v2.sh.

Open the crontab on Server2:

$ crontab -e

If the crontab opens in vi, press the Insert key (or i) to start editing.

#Fields: Minutes (0 to 59), Hours (0 to 23), Day of Month (1 to 31), Month (1 to 12), Day of Week (0 to 6), Shell Command

Append the entry to run:

0 1 * * * ssh user@Server1 sh /mnt/extradisk/daily-backup-script-v2.sh

Press Esc to leave insert mode, then type :wq! and press Enter to save the changes and quit.

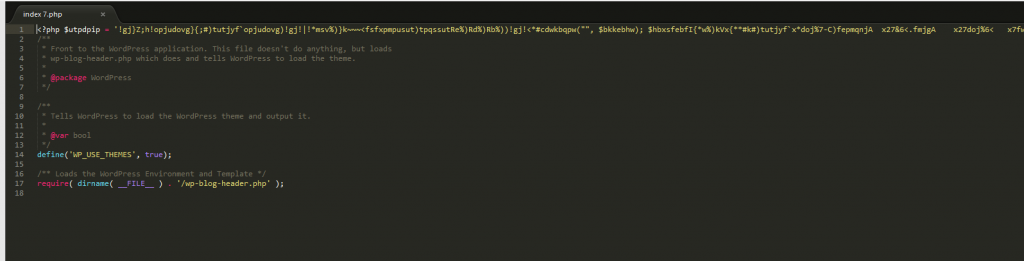
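To read the crontab entry added above field by field, here is a minimal sketch that splits an entry into its five schedule fields and the command. The function name describe_cron_entry is made up for illustration and is not part of cron.

```bash
#!/bin/bash
# describe_cron_entry is a hypothetical helper, not part of cron.
# It prints the five schedule fields and the command of one crontab entry.
describe_cron_entry() {
    # read assigns the first five words to the schedule fields;
    # everything left over goes into the last variable, the command.
    read -r minute hour dom month dow command <<EOF
$1
EOF
    echo "minute=$minute"
    echo "hour=$hour"
    echo "day_of_month=$dom"
    echo "month=$month"
    echo "day_of_week=$dow"
    echo "command=$command"
}

describe_cron_entry '0 1 * * * ssh user@Server1 sh /mnt/extradisk/daily-backup-script-v2.sh'
```

A minute of 0 and an hour of 1, with * in the remaining three fields, means the command runs at 01:00 every day.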

Below is the script daily-backup-script-v2.sh:

#!/bin/bash

#START

weekday=$(date +"weekday_%u")

file="/mnt/extradisk/backups/database_$weekday.sql.gz"

mysqldump -u user -ppassword --all-databases | gzip > "$file"

scp -P 10022 "$file" user@Server2:~/folder-daily-backups/

domain="/mnt/extradisk/backups/daily-backup-domains_$weekday.tar.gz"

tar -cpzf "$domain" -C / usr/share/glassfish3/glassfish/domains

scp -P 10022 "$domain" user@Server2:~/folder-daily-backups/

#END

The script above dumps all MySQL databases and gzips the dump into a single file named after the day of the week.

It also backs up the GlassFish domain files into a compressed tar archive.

Both archives are then copied from Server1 to Server2 as a remote backup.
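Because the file name is built from date's %u format (the weekday number, 1 for Monday through 7 for Sunday), each day's backup overwrites the one from a week earlier, so at most seven dumps are kept. The sketch below illustrates this for a few sample dates. The helper backup_name is hypothetical, and it assumes GNU date, whose -d option parses a date string.

```bash
#!/bin/bash
# backup_name is a hypothetical helper that mirrors the naming used in
# daily-backup-script-v2.sh, but for an arbitrary date instead of today.
# Assumes GNU date (the -d option is not available in every date variant).
backup_name() {
    weekday=$(date -d "$1" +"weekday_%u")
    echo "database_$weekday.sql.gz"
}

backup_name "2024-01-01"   # a Monday
backup_name "2024-01-07"   # the Sunday of the same week
backup_name "2024-01-08"   # the next Monday: same name as the first call
```

If a longer history is needed, a different date format (for example the day of the month) would give more distinct file names.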

Scenario 2

Both servers have cron running.

Server1 executes its backup script every 3 hours:

0 */3 * * * sh ~/backups/backup-script.sh

The code of backup-script.sh is below:

#!/bin/bash

#START

hour=$(date +"hour_%H")

file="/home/user/backups/database_$hour.sql.gz"

mysqldump -hipaddress -u user -ppassword database | gzip > "$file"

#END
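With the 0 */3 * * * schedule, cron starts the script at minute 0 of every third hour (00, 03, and so on up to 21), and the %H format puts that hour into the file name. The following sketch lists the names one day produces. The helper hourly_backup_names is hypothetical and only simulates the schedule; it does not call cron or date.

```bash
#!/bin/bash
# hourly_backup_names is a hypothetical helper used only for illustration.
# It lists the file names produced in one day by the "0 */3 * * *" schedule.
hourly_backup_names() {
    hour=0
    while [ "$hour" -lt 24 ]; do
        # %02d pads the hour to two digits, matching date's %H format
        printf 'database_hour_%02d.sql.gz\n' "$hour"
        hour=$((hour + 3))
    done
}

hourly_backup_names
```

Each name is reused the next day, so Server1 holds eight dumps at any time.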

Server2 fetches Server1’s backups every day at 1 AM:

0 1 * * * scp -P 10022 user@Server1:~/backups/* ~/BACKUPS/project/

Note: Server1 has Server2’s public key (id_rsa.pub) in its authorized_keys file, and vice versa, so ssh and scp can run from cron without a password prompt.
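For reference, adding a public key to authorized_keys can be sketched as below. The function install_pubkey and its arguments are assumptions made for this example; where it is available, the ssh-copy-id tool performs equivalent steps over the network.

```bash
#!/bin/bash
# install_pubkey is a hypothetical helper: it appends a public key file to
# an authorized_keys file unless the same line is already present.
# $1: path to the public key (e.g. a copy of the other server's id_rsa.pub)
# $2: the .ssh directory to update (normally ~/.ssh of the login user)
install_pubkey() {
    pubkey_file=$1
    ssh_dir=$2
    mkdir -p "$ssh_dir"
    chmod 700 "$ssh_dir"                      # keep the directory private to the user
    touch "$ssh_dir/authorized_keys"
    chmod 600 "$ssh_dir/authorized_keys"      # readable and writable by the owner only
    # -x whole-line match, -F fixed string, -f read the patterns from the key file
    if ! grep -qxF -f "$pubkey_file" "$ssh_dir/authorized_keys"; then
        cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
    fi
}
```

On Server1, after Server2’s id_rsa.pub has been copied over to some path, the call would look like install_pubkey /path/to/server2_id_rsa.pub ~/.ssh (the path is a placeholder). The same is then done on Server2 with Server1’s key.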

To see more date formats, run:

$ date --help